Exact Median calculation in Impala

Author: Marcel van den Bosch Date: 22-nov-2019

If you have found this post, you have probably discovered that Cloudera’s Impala, Hive or Apache Spark, is lacking a bit of the out-of-the-box support for calculating the exact Median of a column. Unfortunately, Impala only offers a function that calculated the approximation of the Median. This is done use the APPX_MEDIAN function. In Hive, you can calculate the exact median – but only for integer (whole numbers) values. For example, you could do this in Hive:

select percentile(cast(my_measurement as BIGINT), 0.5) from table_name

If you have a specific use-case where the exact median value is required, you are out of luck! I believe the main reason for this lack of support, is the fact that it is an expensive operation. Also, in many big data scenarios an approximation (as is supported by Impala and Spark) is often quite sufficient for most use-cases.

A colleague of mine reminded me of the fact that the calculation is quite simple (as we learned at school):

- Arrange the numbers in order by size

- If there is an odd number of terms, the median is the center term.

- If there is an even number of terms, add the two middle terms and divide by 2

Approach 1:

- Nested-query (ids)that retrieves the numerical values (measurements) and adds a row_number to it, which is ordered by the values ascending (= rank).

- Nested-query (groups) that retrieves the max. number of observations for a specific group of measurement

- An inner join that relate the individual measurements (and their rank) with the maximum number of observations

- In the where-clause, we select the middle row if there is an odd number of values. If there is an even number of values, we select the middle two.

- A base query, which averages the measurement value and groups it accordingly. Note: that in case of an odd number of values, the average function only averages over 1 value. When there is an even number of values, it averages the middle two values.

It is possible to further simplify this, using one nested query that leverages a count() function over a partition window. This would result in the following alternative approach.

It is possible to further simplify this, using one nested query that leverages a count() function over a partition window. This would result in the following alternative approach.

Approach 2:

- Nested-query (ids) that retrieves the numerical values (measurements) and adds a row_number to it, which is ordered by the values ascending (= rank) and total number of observations (=total rows)

- A base query with where-clause, which averages the measurement value and groups it accordingly. Note: that in case of an odd number of values, the average function only averages over 1 value. When there is an even number of values, it averages the middle two values.

As you can imagine, this concept is not the most efficient in terms of performance. However, running it on Hadoop allows for some horizontal scalability. However, if you have recommendations for improving the performance, please drop a comment below!

Example for approach 1:

See the below example as a practical implementation for approach 1:

SELECT ids.lot

,ids.program

,ids.parameter

,avg(ids.measured_value) AS MedianValue

FROM (

SELECT q.lot

,q.program

,q.parameter

,q.measured_value

,ROW_NUMBER() OVER (

PARTITION BY q.lot

,q.program

,q.parameter ORDER BY q.measured_value ASC

) AS rn

FROM mfg_apps.base_qualifications q

WHERE q.p_aggregation_type = 'site'

) ids

INNER JOIN (

SELECT q.lot

,q.program

,q.parameter

,(count(*)) / 2 AS mid_row

,count(*) AS total_rows

FROM mfg_apps.base_qualifications q

WHERE q.p_aggregation_type = 'site'

GROUP BY q.lot

,q.program

,q.parameter

) groups ON ids.lot = groups.lot

AND ids.program = groups.program

AND ids.parameter = groups.parameter

WHERE (

NOT (groups.total_rows % 2 = 0)

AND ids.rn = ceil(groups.mid_row)

)

OR (

groups.total_rows % 2 = 0

AND (

ids.rn = ceil(groups.mid_row)

OR ids.rn = ceil(groups.mid_row) + 1

)

)

GROUP BY ids.lot

,ids.program

,ids.parameter

Example for approach 2:

Below SQL-code illustrates a practical example for approach 2, where we were able to simplify the query using a count() window function and slightly improve performance:

SELECT ids.lot

,ids.program

,ids.parameter

,avg(ids.measured_value) AS MedianValue

FROM (

SELECT q.lot

,q.program

,q.parameter

,q.measured_value

,ROW_NUMBER() OVER (

PARTITION BY q.lot

,q.program

,q.parameter ORDER BY q.measured_value ASC

) AS rn

,COUNT() OVER (

PARTITION BY q.lot

,q.program

,q.parameter

) AS total_rows

FROM mfg_apps.base_qualifications q

WHERE q.p_aggregation_type = 'site'

) ids

WHERE (

NOT (ids.total_rows % 2 = 0)

AND (ids.rn - ceil(ids.total_rows / 2) = 0)

)

OR (

(ids.total_rows % 2 = 0)

AND (

ids.rn = floor(ids.total_rows / 2)

OR ids.rn = floor(ids.total_rows / 2) + 1

)

)

GROUP BY ids.lot

,ids.program

,ids.parameter;

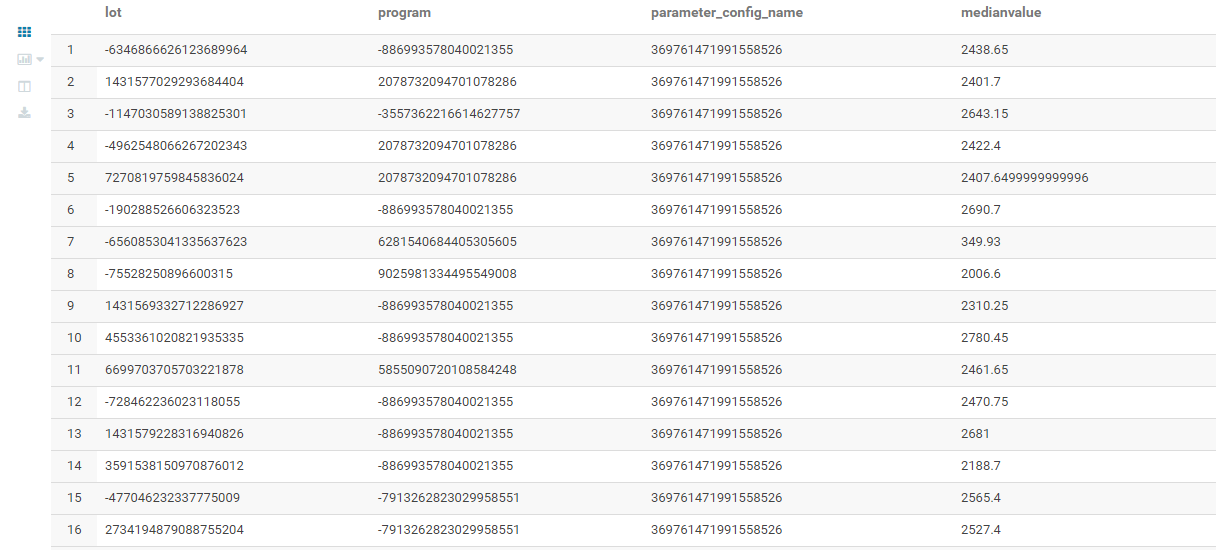

Looking at the results